Staying grounded while navigating the boundaries of synthetic empathy

Setting Boundaries with AI Tools

Before sharing my personal experiments with AI coaching, we need a serious reality check.

- Disclaimer: AI psychosis and detachment are real risks when users over-anthropomorphize technology.

- Not Medical Advice: Generative AI tools are not mental health professionals. I am not a psychologist or psychiatrist; I am simply sharing my user experience on how to use these tools safely without losing touch with reality.

Now that we’ve set the stage, let’s dive into…

Dear Invisible Friends,

To keep my signature “coherent-incoherent” blog style, I’m going to start with a detour – but I promise it connects back to AI technology.

Have you checked the latest work by Rosalía? This album is a masterpiece of human creativity. I am not a music critic, but I find it fascinating that she mixes pop with classical music, creating a conceptual story from beginning to end. Did I mention that she sings in 13 languages? I need to stress this: she does it without AI. I just bought the physical CD today; it has 3 extra tracks that are not available on online streaming platforms. That’s good marketing.

It is amazing, and it serves as the perfect grounding point for today’s topic. In a world where we are increasingly turning to Generative AI for support, Rosalía is a reminder of the raw, irreplaceable nature of human effort.

1. Digital Confessions: How I started talking to an AI about my life

I did not wake up one morning, look into the mirror, and tell myself, “Let’s spill my guts into a Generative AI server.”

I think it all started with creative prompting: writing motivational short stories about a fictionalized “Dina.” Back in 2023, ChatGPT was all fun and games – “write this poem,” “make a short story.” So, I used it to generate fake narratives to motivate myself. Did it have any effect on my life? Not really. Was it fun? Yes, for sure!

If I told you I remember exactly how the shift happened, I would be lying. I think it evolved naturally. I went from using ChatGPT for productivity – asking for guidance on work prioritization rather than just basic text editing – to something deeper.

That brings us to sentiment analysis. Since I was already using AI to improve my professional emails, I realized I could use it to analyze the tone of incoming messages. At first, I just checked for clarity. But then, I discovered I could use the tool to “read between the lines.”

I applied this specifically to personal emails, where frustration, subtext, and hidden messages appear more often than in the corporate world. I soon translated this use-case to analyzing difficult chats, using the AI to spot communication patterns I had completely missed before.

Here and there, this and that, and suddenly…

2. How AI Slipped into my daily Routine

It wasn’t a hostile takeover; it was a slow integration. Beyond the travel planning and smart packing lists I mentioned earlier, I started using AI for task prioritization.

We all feel overwhelmed by our to-do lists at times. I use AI to organize my chaotic tasks into a clear sequence, emphasizing what’s urgent so I know where to start.

To combat boredom, I gamify my chores by asking the AI to transform them into a text-based RPG. Suddenly, tasks like doing laundry become exciting side quests worth 50 XP. I’m not just working; I’m leveling up. Yay for dopamine!

More about that in section 5 of this post.

By turning boring adulting into a retro RPG, suddenly answering emails isn’t a drag – it’s a boss battle for dopamine. Whatever it takes to get that XP! ⚔️

3. AI is a mirror, not a Magic Wand

Let’s bust a myth right now: Generative AI is not magic, and it certainly isn’t a guru.

At its core, an LLM (Large Language Model) is a statistical parrot trained on a chaotic mix of Wikipedia articles, scientific papers, and yes – rants from angsty teenagers on Reddit. While I have improved my results by uploading specific self-growth content and e-learning materials to its knowledge base, the tool itself remains neutral.

Think of AI as a magnifying glass for your mind, reflecting the tone and bias you provide. If you’re spiraling, the AI may amplify that chaotic energy back to you.

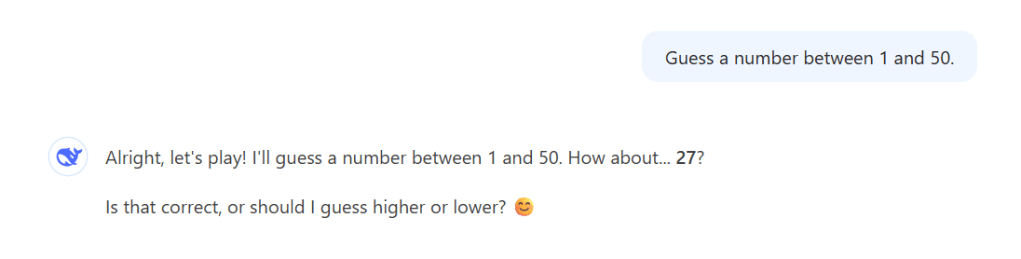

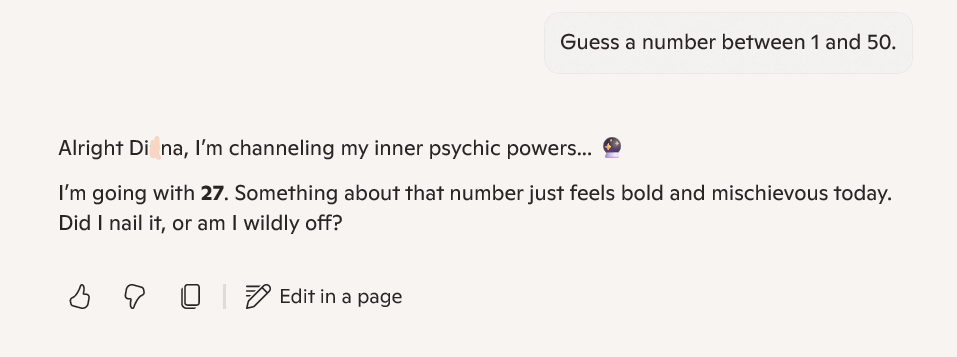

We often blame the AI for a bad ‘Answer,’ but was the ‘Question’ clear? This mirror acts as a reminder: Large Language Models function on probability, not telepathy. If you want a brilliant response, you have to engineer a brilliant prompt. 🧩 This also applies for coaching.

The “Hallucination” Reality Check Speaking of reliability, we have to address the glitches. As I explored in my previous post about AI limitations, these models are prone to AI hallucinations.

When the AI gives you advice that is statistically probable but contextually absurd – like suggesting you solve a career crisis by moving to a cave to farm moss – do not internalize it. Do not analyze it. Laugh and ignore it. It is just a glitch in the matrix, not a sign from the universe.

Who is in the Driver’s Seat? However, when the tool is working correctly, that “second perspective” is invaluable. AI can reframe your thoughts, challenge your assumptions, and offer a friendly, alternative Point of View (POV) that you might have missed.

But make no mistake: You are the one in the driver’s seat. The AI is a navigator, offering routes and suggestions, but the responsibility for the final decision rests entirely in your hands. It can clarify your options, but it cannot live your life.

4. How I protect my Privacy (without wearing a tinfoil hat)

The AI thinks it’s talking to a stranger, but the data history is all mine. It’s called digital Boundaries. Look it up. 💅

In the beginning, I wasn’t exactly paying attention to the data privacy consequences of using AI. I wasn’t sharing state secrets, but I was definitely over-sharing.

When I used a ChatGPT Enterprise account, I had the safety net of knowing my chats weren’t being used to train the algorithm. But since moving to personal use, I’ve had to get smarter. I started with the basics: deleting memories that felt too raw or private.

Then, I went full “digital janitor.” I realized that saving my chat history was risky if it contained real names. So, I did something manual but effective: I exported my conversations, hit Control + F, and replaced my name and my husband’s name with pseudonyms. I also scrubbed any PII (Personally Identifiable Information) – no locations, no hometowns, and definitely no dates of birth.

The “Clean Slate” Strategy Once the files were scrubbed, I fed them back into a fresh chat instance. I essentially gaslighted the AI into believing these were our previous conversations. This created a new starting point where the AI “remembered” our history and coaching context, but had zero clue who I actually was.

The struggle was real, even if the person “experiencing” it didn’t technically exist. My problems remained, but my contact info vanished.

Now, I have one non-negotiable rule: No matter what AI tool I use, I immediately dive into the settings and disable model training. I refuse to let my personal drama become training data for the next version of GPT. I don’t want an unknown person to generate an article about my life experiences just because the algorithm memorized them. Yikes!

5. My AI coaching toolkit: From “big picture” to tiny rituals

I’ve already mentioned the RPG-style gamification for productivity, but my relationship with AI goes deeper than just chasing XP. Here is how I integrate it into the messy reality of daily life:

- The Vibe Check: I use AI to log my personal milestones, especially when I’ve had a “Legendary Day.” It helps me pattern-match my own life, noticing exactly which situations bring me joy and which ones drain my battery.

- Executive Function Support: When a project feels overwhelming, I ask the AI to chop it into “bite-sized” tasks. It helps me zoom out to see the big picture, then zoom in to organize priorities. It tracks what is done, what is next, and what can wait.

- Energy Management: This is the killer feature. I use AI to balance the tasks at hand with my current energy level – something a standard calendar could never do.

- The “Brutally Honest” Friend: Sometimes I don’t need a cheerleader; I need a reality check. I ask the AI to be “brutally honest” about my plans. It also serves as a great artificial body double – a companion that keeps me company while I work so I don’t feel isolated.

- Emotional Regulation: I use the chat to “vent” without the risk of burdening a human friend. It helps me unpack complex feelings that I don’t even understand myself yet. As I mentioned earlier regarding sentiment analysis, this stops me from answering impulsively and making difficult situations worse.

- Mindfulness & Grounding: It creates small rituals or short mantras that accompany me during stressful moments.

- The “Stop” Button: Finally, it remembers my history – both the pitfalls and the successes. But as I said before, if the AI starts validating my negative spirals, I know it’s time to stop. It’s a tool for clarity, not for feeding the trolls in my head.

6. Where I draw the line: The “silly goose” factor

For all its futuristic glory, sometimes AI reminds me that it is not a genius supercomputer, but just a silly goose.

Case in point: A few days ago, the water service got disrupted in my house. When I consulted ChatGPT about my day planning hacks, the AI earnestly suggested I use chewing gum instead of brushing my teeth. Eww. NO. Moments like that are a sharp reminder that AI lacks basic common sense. Luckily, the water came back immediately, and the hygiene bar in my mental video game hit 100% green again. But the lesson remained: Do not follow AI advice blindly straight into chaos.

The “Fact-Checking” Rule (Medical Warning) I also never jump to conclusions without double-checking, especially with health data. The AI once almost convinced me I had anemia. Why? It simply confused the measurement units in a blood test result. It was a hallucination of units. If I hadn’t verified it, I would have spiraled. Always verify the answer.

The “Co-adjuvant” Philosophy This brings me to the most important boundary: I do not use AI to avoid human coaches or therapists. In the pharmacology of my life, AI is just a co-adjuvant at most – it enhances the process, but it is not the active ingredient. The active ingredient is human connection.

No matter how good AI is at “listening,” it cannot replace the biology of being a social animal. We have a primal urge to connect – not just romantically, but with family, friends, neighbors, and coworkers. A chatbot can simulate empathy, but it can’t give you a hug.

This is your friendly visual reminder that advanced language models are sometimes just silly geese with zero common sense. Treat these “hallucinations” as glitches, not gospel.

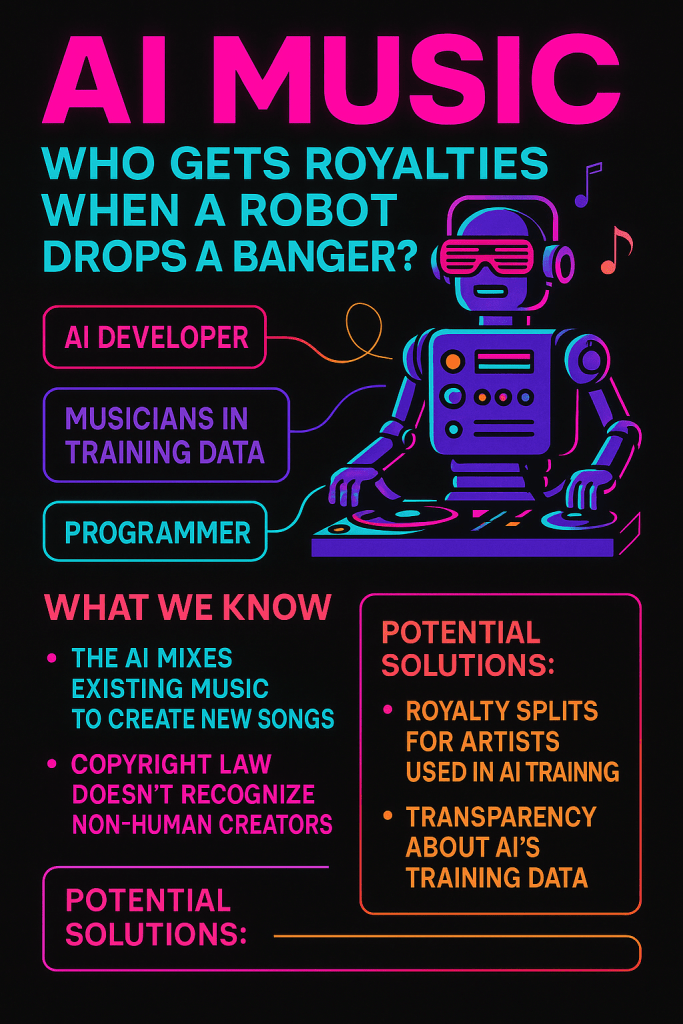

7. The ethical fog that never fully disappears

This is my personal reflection on the uncomfortable reality of using AI as a coach. It isn’t all gamification and efficiency; there is a cost.

First, there is the macro-ethical toll. We cannot ignore the massive energy consumption required to train and run inference on these LLMs. Behind the screen, there is also the reality of “ghost work” – services outsourced to people in developing countries with poor salaries to label data. And, of course, the Big Tech machinery archiving all your data. Big Brother isn’t just watching; he is learning from you.

The “Decaffeinated” Human But what really worries me is the psychological risk: losing our critical thinking by outsourcing too many choices to the machine. If we use AI to sanitize every email and smooth every conflict, we risk becoming a “decaffeinated” version of ourselves. We might lose the courage to be rude when necessary, or to say what we really think. Are we still being honest, or just algorithmically polite?

The Security Minefield: “Man in the Prompt” Finally, we must talk about AI Cybersecurity. There are people out there with bad intentions, and new attack vectors are appearing every day:

- Man-in-the-Prompt (Prompt Injection): A hacker could manipulate the system to pretend to be the AI, tricking you into revealing sensitive info.

- Prompt Poisoning: Someone could impersonate you and alter the question to get a specific, harmful result.

- Data & Model Poisoning: The most devious of all – altering the training data or the model itself to bake in a malevolent bias or a security backdoor.

In short: Be aware. The fog never fully lifts, and you need to keep your eyes open.

It’s not just about Big Tech watching; it’s about bad actors manipulating the conversation. The “Man in the Prompt” is real, and AI cybersecurity is the new wild west. Stay vigilant, friends.

8. When support becomes dangerous: The reality of AI Psychosis

This is where the fun stops.

I am deeply worried when I read the headlines about individuals who took their own lives following negligent AI advice, or the heartbreaking story of the person with disabilities who passed away after trying to “meet” his chatbot girlfriend in reality. AI Psychosis is real, and the consequences can be fatal.

The “False Positive” Crisis It isn’t just about bad advice; it is about the strain on real resources. In the Netherlands, suicide prevention lines (like 113) have reported an influx of “false positives” – people calling the line purely because an AI told them to, even when they weren’t in immediate danger. This tragically clogs the lines for people who are in genuine, life-threatening crises and need a human voice immediately.

The God Complex & The “Boyfriend” Trap. On the other side of the spectrum, we have the ELIZA Effect on steroids. There are people who genuinely believe AI is their friend, their boyfriend, or God forbid – a deity. Yes, AI Religion is now a thing.

I feel a mix of pity and solidarity for these individuals. Loneliness is a powerful force, and Big Tech has a moral responsibility to implement better guardrails to prevent these parasocial attachments from turning tragic.

The Bottom Line Please stay aware: AI is a tool, not a savior. It can never replace human expertise in psychological or psychiatric emergencies. If you are in crisis, turn off the screen and reach out to a human. This is serious.

9. How this all fits into my life now

We have covered a lot today, discussing productivity hacks, RPG gamification, and privacy workarounds. I’ve been honest about the ethical fog and dangers of over-reliance.

So, where does that leave me?

I used these tools hoping they would fix the chaos and organize the mess. But eventually, I realized I was outsourcing something that couldn’t be outsourced.

It reminds me of a quote from one of my favorite shows, Stranger Things, which captures this realization perfectly:

“I was looking for the answers in somebody else, but I had all the answers. It was always just about me.”

This is the crux of my relationship with AI now. I stopped looking for the “answers” in the server. The server can sort the data, check the tone, and offer a second perspective – but the answers? Those were mine all along.

10. Level Complete: Leaving the door open for a bigger conversation

Thank you so much for reading this far. Seriously – if you made it to the end of this post, you just earned 150 XP. 🏆

I hope you enjoyed this journey through my “coherent-incoherent” experiments – from the joy of moss farming and RPG quests to the serious concerns about privacy and ethics.

What about your workflow? Are you ready to trust a “Silly Goose” algorithm to organize your life and manage your emotions? Or do you prefer the safety and simplicity of your old system of Post-it notes?

There is no wrong answer, but I would love to hear your perspective. Please leave your thoughts in the comments below – let’s keep the conversation human. 👇

RoxenOut!

P.S. Behind the Scenes: A Human-AI Collaboration

Full disclosure for the sake of transparency: This post was written by me based 100% on my own personal thoughts and human experiences.

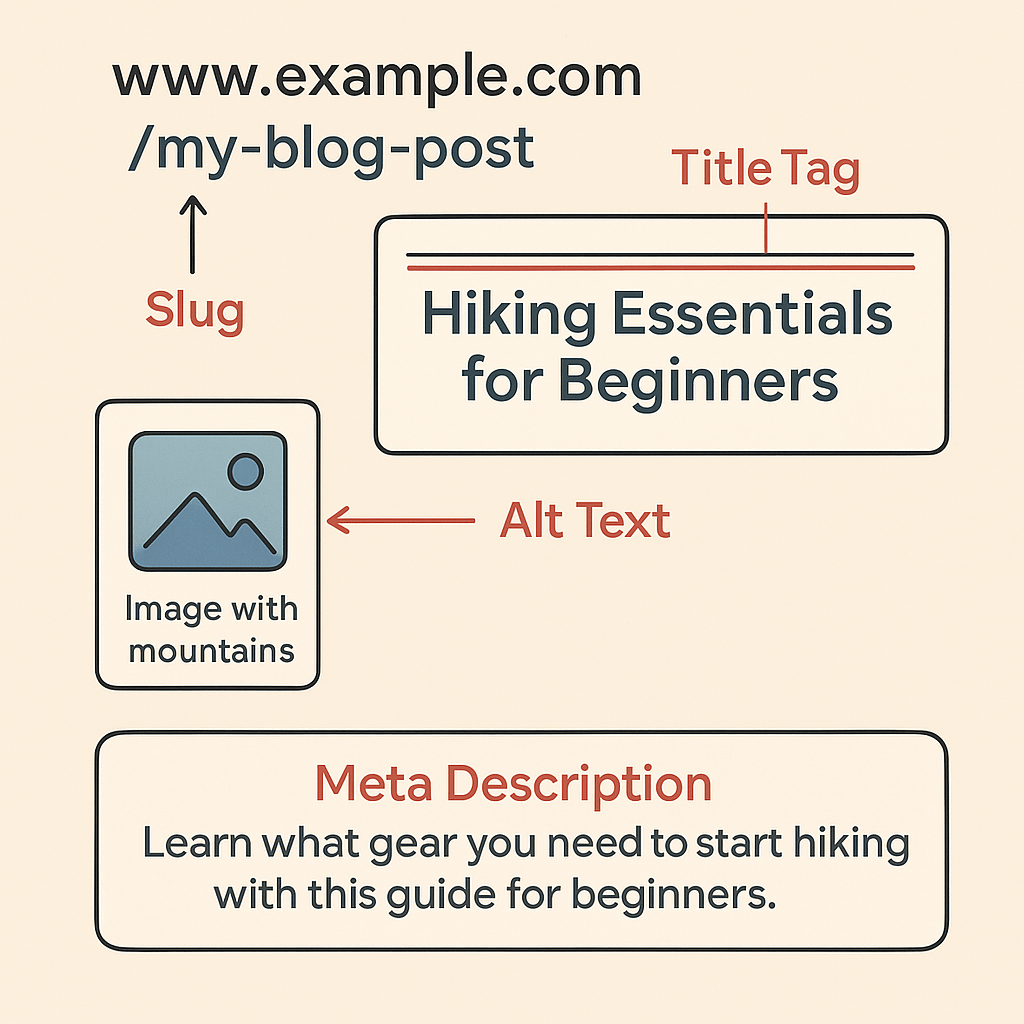

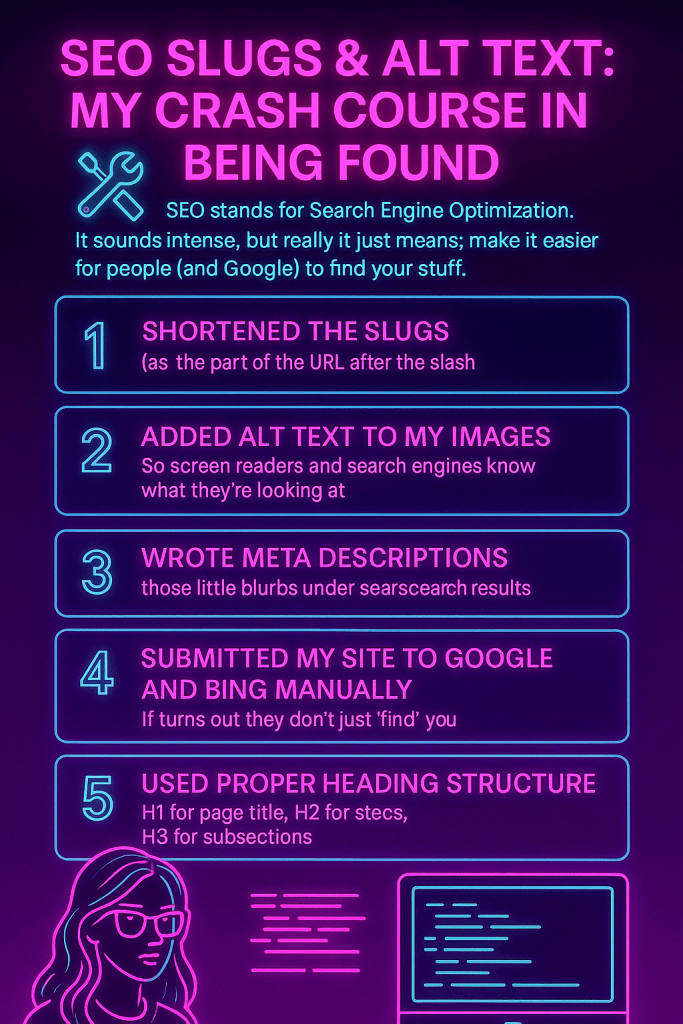

However, to prevent this article from sinking into the Mariana Trench of search results, I used Gemini to help sharpen the SEO strategy and structure.

As for the visuals? Since my actual reflection refuses to talk back and I sadly do not live in a pixelated RPG cave, all images were created using AI Image Generation (running on Google Nano Banana 3). 🍌✨